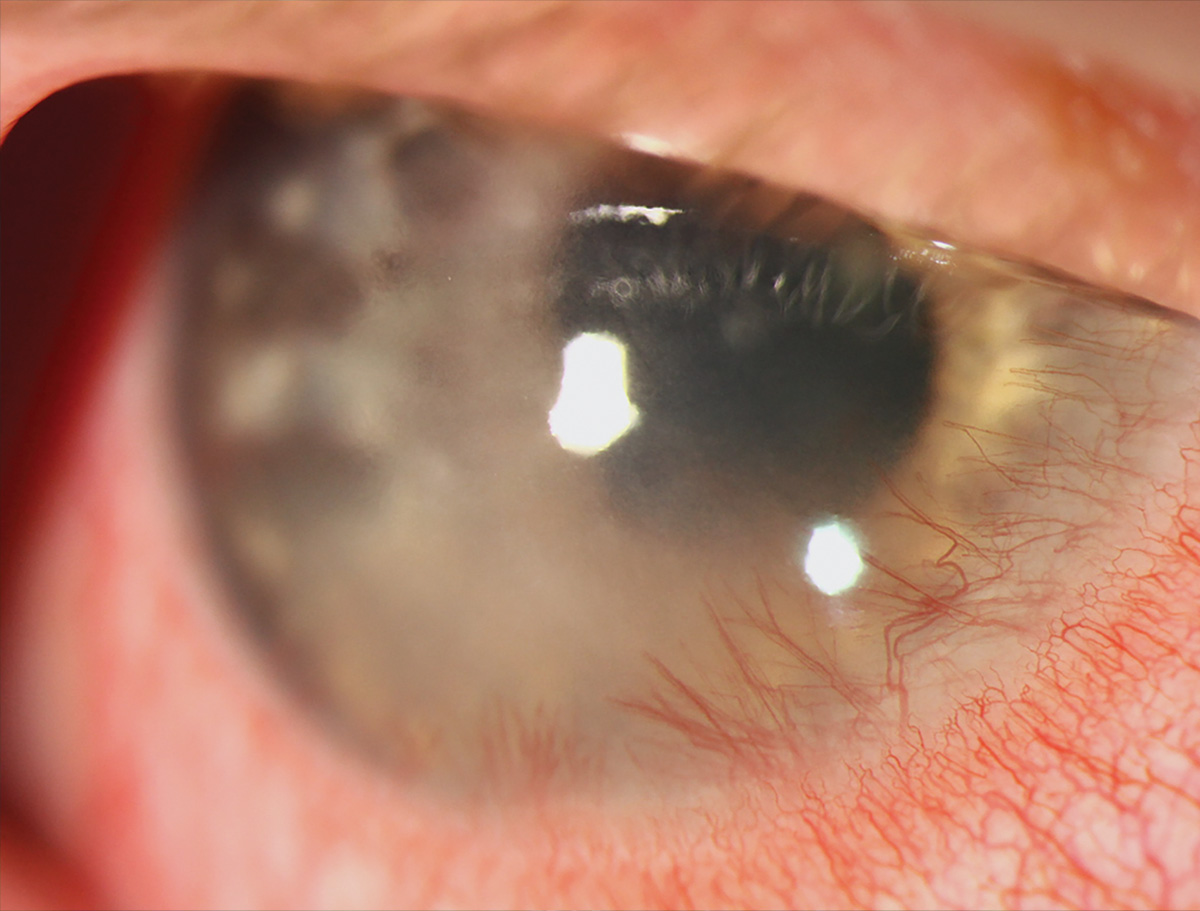

When tasked with diagnosing 20 cases of corneal disease, ChatGPT-4.0 had an 85% accuracy rate compared with ChatGPT-3.5’s 60%, showing the AI model is improving. However, both models fell short of the three human corneal experts in the study, who were 100%, 90% and 90% accurate, respectively. Photo: Alison Bozung, OD.

A week after a report found ChatGPT superior in some ways to human beings in handling glaucoma and retina questions, another study also gives it good marks in aiding corneal disease care, though in partnership with doctors rather than as a replacement. Since its inception, artificial intelligence has been increasingly integrated into various medical disciplines, including ophthalmology. However, its uptake has been slower in the anterior segment, given the complexity of corneal diseases. That may be changing as AI models improve with each iteration, and a recent study in the journal Cornea found that combining ChatGPT with human expertise may be the key to unlocking the technology’s full potential.

Researchers compared the capabilities of ChatGPT-4.0 and ChatGPT-3.5 with those of three human corneal specialists. All were given 20 randomly selected cases of corneal disease, including infections, dystrophies and degenerations, drawn from a publicly accessible online database at the University of Iowa. The text of each case description was entered into both ChatGPT models, and the researchers asked each model to provide a provisional diagnosis. The results showed that ChatGPT-4.0 was more accurate than ChatGPT-3.5.

ChatGPT-4.0 achieved an 85% accuracy rate (17 of 20 cases correct), whereas ChatGPT-3.5 achieved 60% accuracy (12 of 20). By comparison, the three human corneal specialists were 100%, 90% and 90% accurate, respectively. When the researchers compared ChatGPT-4.0’s diagnoses with those of each specialist, the interobserver agreement was 85%, 80% and 75%, respectively. The interobserver agreement between ChatGPT-3.5 and each specialist was only 60%.

The human experts were also more accurate on rare conditions. Both ChatGPT versions misdiagnosed two rare cases, infectious crystalline keratopathy and lattice corneal dystrophy type II, which the human experts diagnosed correctly.

According to the authors, the study sheds valuable light on the benefits of integrating AI into corneal services, particularly ChatGPT’s ability to process large amounts of medical data quickly. In this study, the AI models took a couple of minutes to diagnose all 20 cases, whereas the human specialists took 20 to 40 minutes in total. “[This] enhances diagnostic speed and efficiency, leading to quicker patient management and consistent identification of underlying conditions,” they said.

The authors cited limitations of ChatGPT itself, as well as of their own study. Regarding ChatGPT, they said its accuracy “lies on the quality and diversity of the training data it has been exposed to until September 2021. Therefore, the model may encounter challenges when faced with rare or emerging corneal conditions that lack representation in its training data set.” They added that ChatGPT’s output should always be validated through clinical evaluation, since its insights may sometimes derive from nonscientific, publicly available sources.

As for their own study, the authors said follow-up studies with a larger number of cases would help verify these findings. “However, obtaining larger databases with a greater number of case reports is highly challenging and requires larger multicenter and multidisciplinary collaborations,” they stated. “One major obstacle, however, is ethical considerations and data privacy issues. The utilization of patient data for diagnostic purposes raises concerns about data security and patient confidentiality.” Echoing a broader concern about the expanding use of AI, the authors called for rigorous safeguards and compliance measures to ensure ChatGPT’s responsible and ethical use.

“As AI continues to reshape health care, the potential of ChatGPT to enhance diagnostic accuracy, expedite patient care, empower medical education, and stimulate research is evident,” concluded the authors. “Although challenges exist, a balanced approach that combines AI-generated insights with clinical expertise holds the key to unlocking the full potential of ChatGPT for the diagnosis of corneal conditions.”

Delsoz M, Madadi Y, Munir WM, Tamm B, Mehravaran S, Soleimani M, Djalilian A, Yousefi S. Performance of ChatGPT in diagnosis of corneal eye diseases. medRxiv. August 28, 2023. [Preprint]